Why Demonizing AI Misses the Point

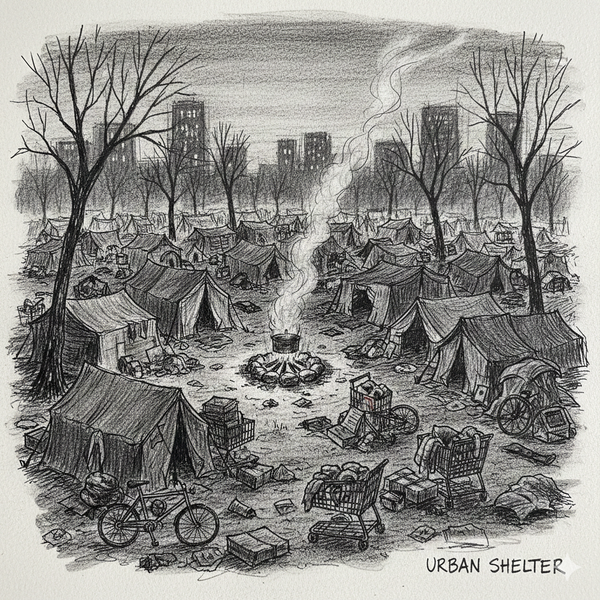

By Chelsea Lynn Wooton

Artificial intelligence has become an easy villain, not because it has failed us, but because it has exposed us. It has revealed how deeply we tie worth to struggle, intelligence to gatekeeping, and morality to who gets left behind.

AI is blamed for cheating, laziness, job loss, and the erosion of “real” intelligence. But this backlash says far more about human fear than it does about the technology itself. AI is not a moral failure. It is a tool, and for many people it is a lifeline: an accessibility aid, an equalizer, and a means of participation that was previously denied.

The stigma surrounding AI use isn’t rooted in evidence. It’s rooted in psychology.

1. Identity Threat: When Intelligence Feels Personal

For generations, intelligence, creativity, and expertise have been treated not just as skills, but as moral currency: proof of worth, diligence, and legitimacy. When AI demonstrates capabilities we once believed defined us, it destabilizes more than jobs. It destabilizes identity.

Instead of asking how tools can expand human capacity, some respond by condemning the tool itself. This isn’t a reasoned ethical stance; it’s an ego defense.

But tools have always extended human ability. Writing did not make memory immoral. Calculators did not make math dishonest. Spellcheck did not make literacy fraudulent. AI does not diminish human intelligence; it reveals how much of intelligence has always been collaborative, cumulative, and assisted.

2. Fear of Status Loss

Many systems of power rely on scarcity: limited access to education, polished language, technical skill, time, and physical endurance. AI reduces those barriers.
When knowledge and competence become more accessible, people who benefited from gatekeeping often experience that shift as a threat. The reflex is predictable: redefine legitimacy so that fewer people qualify. Calling AI “cheating” is often an attempt to preserve hierarchy, not integrity. But progress has never come from protecting ladders; it comes from widening them.

3. Moralization as a Defense Mechanism

When people feel overwhelmed, behind, or uncertain, fear often disguises itself as morality. “I’m afraid of becoming irrelevant” becomes “This is unethical.” Instead of admitting confusion, people reach for condemnation.

This moral panic isn’t new. It’s a common psychological response to rapid change. But conflating discomfort with wrongdoing doesn’t protect values; it weaponizes them. Judgment becomes easier than adaptation, which only delays that adaptation and harms those who benefit most from new tools.

4. Cognitive Dissonance and the Myth of Suffering

We are taught that effort equals virtue and struggle equals legitimacy. AI disrupts that story. If something becomes easier, many assume it must be wrong, because acknowledging otherwise would mean admitting that much of our suffering was never morally necessary.

But reducing unnecessary friction is not moral decay; it is the entire point of progress. We don’t romanticize washing clothes by hand or memorizing encyclopedias. We recognize that liberation matters. Cognitive labor should not be the lone exception.

5. The Historical Pattern We Keep Repeating

Every transformative technology is first demonized. The printing press was accused of destroying memory. Calculators were said to ruin math. The internet was framed as the end of critical thinking. Wikipedia was dismissed as illegitimate.

AI feels different only because it mirrors cognition itself.
It doesn’t just change what we do; it challenges how we define thinking, authorship, and value. Discomfort is not insight. It is simply the first stage of adjustment.

6. Projection and Scapegoating

Criticism of AI users often masks personal fear: fear of exposure, displacement, or obsolescence. Instead of processing those fears honestly, critics project them outward.

Shaming people for using AI doesn’t defend standards. It defends self-image. Scapegoating is easier than reckoning with change.

7. Control Anxiety and the Fear of the Unseen

Humans distrust systems they can’t easily see or predict. AI feels opaque, fast, and emotionally unfamiliar, which triggers a primal discomfort. But unfamiliarity is not danger. Rejecting AI because it feels unsettling is no different from rejecting literacy because it once felt unnatural.

Who AI Actually Helps

AI is especially powerful for people who have been historically excluded:

- Disabled individuals managing fatigue, brain fog, or cognitive load
- Single parents balancing time scarcity
- People without access to elite education
- Neurodivergent thinkers who process differently
- Those rebuilding after illness, trauma, or poverty

For many, AI doesn’t replace thinking; it makes thinking possible.

When Stigma Becomes Personal

I am not writing this from a theoretical distance. I am a person living with hand and foot neuropathy. I experience pain, numbness, and functional limits that directly affect how I write, cook, and work: the very skills people insist must remain “pure” to count as legitimate.

I have been personally demonized for using AI as an accessibility tool. Not because I lied. Not because I misrepresented my work. But because I refused to suffer performatively.

What critics often ignore is this: using AI does not erase my thinking. It preserves it. It allows me to communicate clearly on days when my hands don’t cooperate. It allows me to plan, organize, and express ideas when pain would otherwise silence me.
When people shame disabled individuals for using assistive tools, whether wheelchairs, screen readers, or AI, they aren’t defending integrity. They are enforcing a hierarchy that rewards endurance over honesty and exclusion over equity.

Demonization in these cases isn’t about ethics at all. It’s about control: who gets to decide what “counts” as real work, real intelligence, or real contribution.

If your definition of integrity requires someone to hurt more than necessary, it isn’t integrity. It’s cruelty.

The Real Ethical Question

The ethical question is not whether people should use AI. It’s whether we will allow stigma to determine who gets access to tools that improve quality of life.

Demonizing AI use doesn’t protect humanity. It protects outdated hierarchies, ones that quietly exclude disabled people, caregivers, and anyone whose body or circumstances don’t allow constant friction.

A healthier response is curiosity over condemnation, adaptation over denial, and ethics grounded in understanding rather than fear.

AI is not the enemy. Refusing to evolve, and refusing to include, is.

Progress has never come from shaming people for using tools. It comes from learning how to use them wisely, ethically, and together.